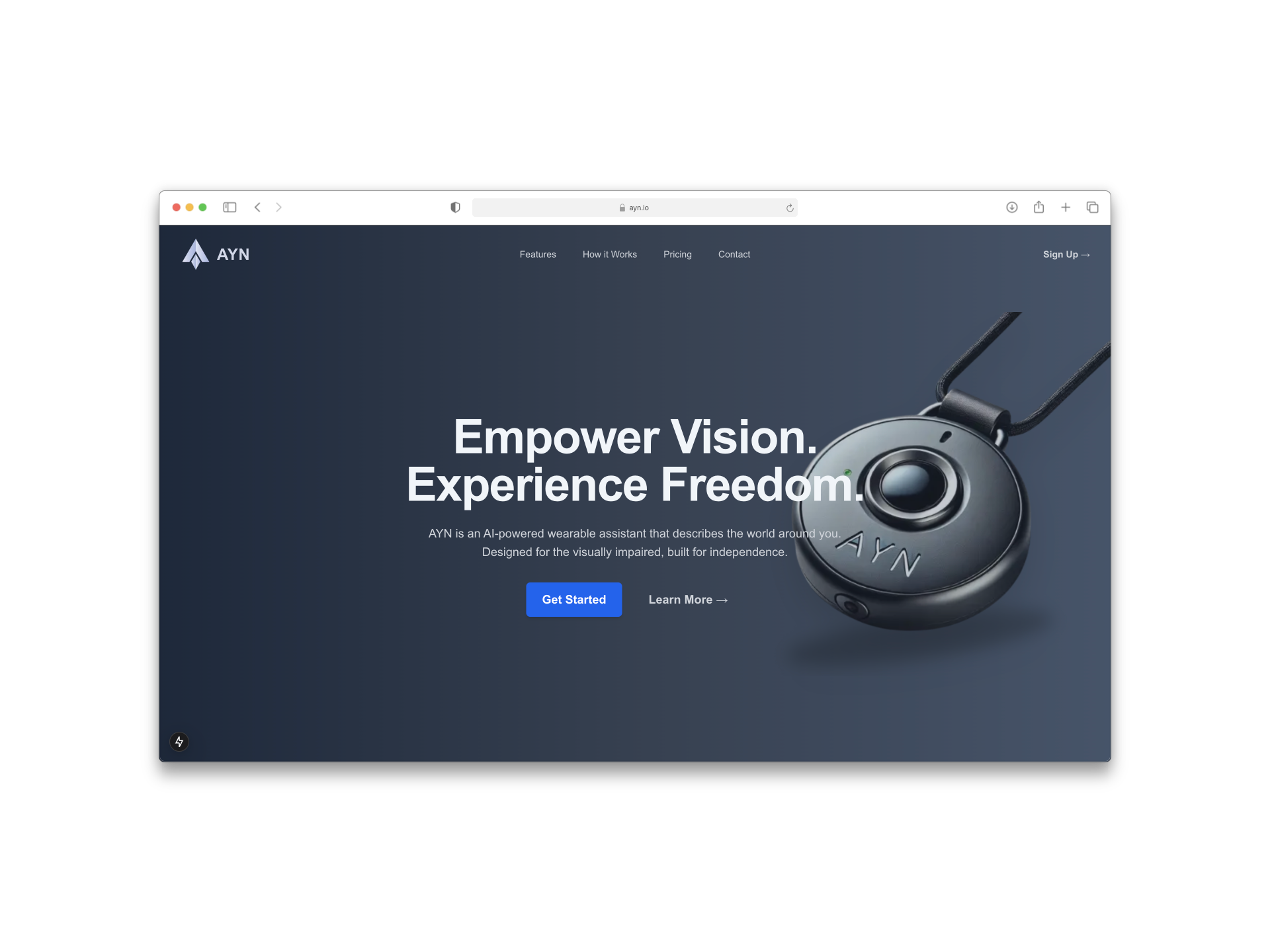

AYN - AI-Powered Visual Assistant

An assistive app that gives visually impaired individuals real-time information about their surroundings.

Using a camera feed, the app detects objects, estimates their distance, and instantly generates clear audio descriptions — so users can “see” through sound.
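One way to pair each detected object with a depth estimate is sketched below. This is a minimal illustration, not AYN's code. It assumes detections arrive as `(x1, y1, x2, y2)` pixel boxes, which is the layout of the rows in `results.xyxy[0]` from YOLOv5 loaded via `torch.hub`. It also assumes the MiDaS output has already been resized to the frame's resolution. MiDaS predicts relative inverse depth: the values are unitless and larger means closer, so turning them into metres would need extra calibration. The `Detection` class, the `box_depth` and `attach_depth` helpers, and the 0.5 confidence cut-off are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Detection:
    """Hypothetical container for one detector output row."""
    label: str                      # class name, e.g. "chair"
    confidence: float               # detector score in [0, 1]
    box: tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels; x2/y2 exclusive


def box_depth(depth_map: list[list[float]], box: tuple[int, int, int, int]) -> float:
    """Median relative inverse depth inside a box (larger value = closer)."""
    x1, y1, x2, y2 = box
    height, width = len(depth_map), len(depth_map[0])
    # Clamp the box to the frame so partly off-screen objects still get a value.
    x1, x2 = max(0, x1), min(width, x2)
    y1, y2 = max(0, y1), min(height, y2)
    values = [depth_map[y][x] for y in range(y1, y2) for x in range(x1, x2)]
    if not values:
        raise ValueError(f"box {box} does not overlap the depth map")
    return median(values)


def attach_depth(
    detections: list[Detection],
    depth_map: list[list[float]],
    min_conf: float = 0.5,
) -> list[tuple[Detection, float]]:
    """Pair every sufficiently confident detection with its median box depth."""
    return [
        (det, box_depth(depth_map, det.box))
        for det in detections
        if det.confidence >= min_conf
    ]


if __name__ == "__main__":
    # Toy 2x4 "depth map": the right half of the frame is much closer.
    depth = [
        [0.1, 0.1, 0.9, 0.9],
        [0.1, 0.1, 0.9, 0.9],
    ]
    dets = [
        Detection("door", 0.7, (0, 0, 2, 2)),
        Detection("chair", 0.8, (2, 0, 4, 2)),
        Detection("cat", 0.3, (1, 0, 3, 2)),  # dropped: below min_conf
    ]
    for det, d in attach_depth(dets, depth):
        print(det.label, d)
```

Using the median over the box, rather than the mean, is a design choice. It keeps background pixels near the object's edges from skewing the estimate.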

Why we built it:

In many parts of the world, visually impaired individuals have little access to affordable assistive technology. We wanted to create something accessible, fast, and portable, using AI to bridge the gap between the environment and the user’s understanding of it.

Challenges & how we solved them:

- Real-time accuracy: We integrated YOLOv5 for object detection and MiDaS for depth estimation, making sure they worked together smoothly with low latency.

- Making it truly helpful: We used GPT-4o to turn raw object data into natural, human-friendly descriptions and OpenAI’s TTS API to deliver those descriptions instantly as audio.

- Accessibility-first UI: Built with Next.js, the interface uses large buttons, voice feedback, and a responsive design, so it works across devices, even on phones with low processing power.
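The hand-off from raw object data to GPT-4o could look roughly like the function below, which packs the detections into a chat-style message list. This section does not say what AYN actually sends. The prompt wording, the `build_messages` name, and the input shape (dicts with `label` and a relative `depth`, already ordered closest first) are all assumptions for illustration.

```python
import json

# Hypothetical prompt text; AYN's real GPT-4o instructions are not given here.
SYSTEM_PROMPT = (
    "You narrate a camera view for a visually impaired user. "
    "Use one or two short sentences, name the closest objects first, "
    "and mention anything that could be an obstacle."
)


def build_messages(objects: list[dict]) -> list[dict]:
    """Turn detected objects into a system + user message pair.

    `objects` is assumed to be ordered closest-first, each item shaped like
    {"label": "chair", "depth": 0.87}, where "depth" is a unitless relative
    inverse-depth score (not metres). Any other keys are ignored.
    """
    compact = [
        {"label": o["label"], "relative_depth": round(o["depth"], 2)}
        for o in objects
    ]
    user_text = "Objects in view, closest first: " + json.dumps(compact)
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    for message in build_messages([{"label": "chair", "depth": 0.9},
                                   {"label": "door", "depth": 0.1}]):
        print(message["role"], "->", message["content"])
```

With the official `openai` Python package, this list could be passed as `messages=` to `client.chat.completions.create(model="gpt-4o", ...)`. The reply text could then go to `client.audio.speech.create(...)` to produce audio. The TTS model and voice AYN uses are not stated in this section. Sending only labels and rounded depths keeps each request small.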

Technical highlights:

- Multi-model AI pipeline combining YOLOv5 + MiDaS for spatial awareness

- Depth-based prioritization so closer objects get described first

- WebRTC-powered video processing for low-latency performance

- Cross-platform compatibility for web and mobile browsers
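Depth-based prioritization can be as simple as a sort. In this sketch, objects are ordered by MiDaS-style relative inverse depth, largest (closest) first. The list is then capped so each spoken description stays short. The `SceneObject` and `prioritize` names and the cap of three are hypothetical choices for illustration, not values taken from AYN.

```python
from typing import NamedTuple


class SceneObject(NamedTuple):
    """Hypothetical pairing of a detected label with its depth score."""
    label: str
    inv_depth: float  # MiDaS-style relative inverse depth: larger = closer


def prioritize(objects: list[SceneObject], max_items: int = 3) -> list[SceneObject]:
    """Return the closest objects first, capped at max_items for brief narration."""
    # Descending inverse depth puts the nearest objects at the front.
    # sorted() stays stable with reverse=True, so ties keep the detector's order.
    return sorted(objects, key=lambda o: o.inv_depth, reverse=True)[:max_items]


if __name__ == "__main__":
    scene = [
        SceneObject("wall", 0.1),
        SceneObject("chair", 0.9),
        SceneObject("person", 0.5),
        SceneObject("door", 0.3),
    ]
    print([o.label for o in prioritize(scene)])  # ['chair', 'person', 'door']
```

Capping the list trades completeness for brevity. Farther objects are simply left for a later frame rather than lengthening the current announcement.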